At the foot of the Arenal volcano in Costa Rica

I made it back from Costa Rica!

In last week’s newsletter, I shared that I was furiously writing newsletters the night before I left on this school spring break tour with my daughter (a high school junior).

It was incredible. We hiked. We toured coffee and chocolate farms. We ziplined through the jungle. We sampled authentic Costa Rican food. (And I came home with LOTS of great local coffees.)

I also did my absolute best not to think about work — about teaching, about AI, about the emails accumulating in my inbox — for a whole week.

We got back on Monday (well, at 2am on Tuesday morning).

My daughter went back to school on Tuesday (and survived!).

And on Tuesday morning, I made some of that Costa Rican coffee, rolled up my sleeves, and started digging through a huge pile of emails in my inbox.

Now that I’m back, I keep thinking about a recent conversation with a friend about students and AI. It made me think about what I would tell students about AI if I had the opportunity …

… so I made a list below. And I’d love for you to add to the list in the poll!

In this week’s newsletter:

🗳 Poll: More student AI maxims

✅ 10 maxims for students about AI

🗳 Poll: More student AI maxims

I’ll gather your responses to this week’s poll and share them in next week’s newsletter!

Instructions:

Please vote on this week’s poll. It just takes a click!

Optional: Explain your vote / provide context / add details in a comment afterward.

Optional: Include your name in your comment so I can credit you if I use your response.

Would you add anything to the 10 maxims for students about AI?

✅ 10 maxims for students about AI

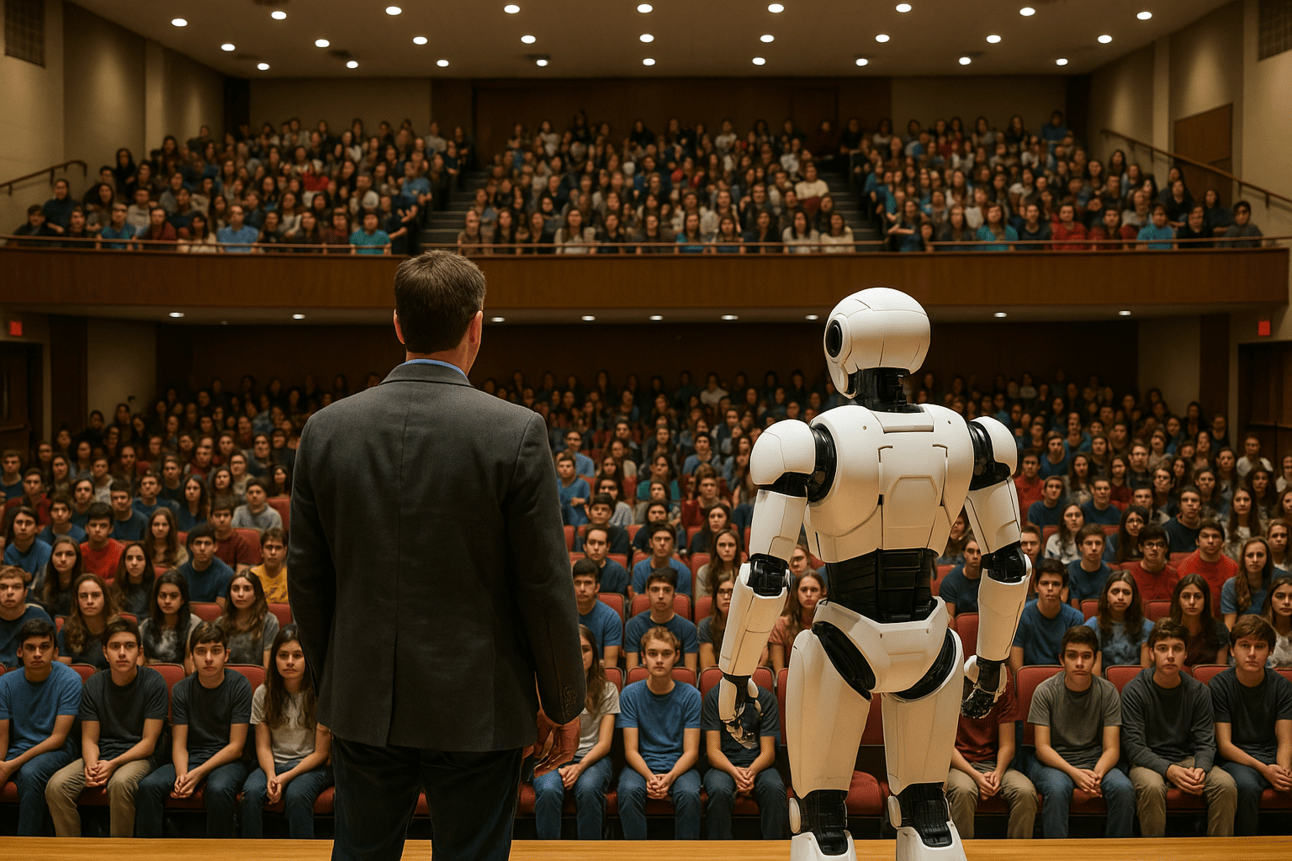

Image created by DALL-E inside ChatGPT

At a recent teacher conference, I had lunch with a friend, and we started talking about AI in education. (No surprise there … we both write and speak about AI a lot in our work, and we both have strongly held opinions about it.)

She asked me (mostly to get a conversation going) what essential things students should know about AI.

Of course, it made me think about my own kids — a college freshman, a high school junior, and a high school freshman. What do I want to make sure that they know? What should we talk about when it comes to AI and their learning — and their future lives?

We’ve been talking about AI literacies a lot over the last couple of months. In case you missed it, here is our three-part series on the ACE Framework for AI literacy …

And now, I wanted to take a moment to apply that to a list of 10 maxims I think students should know about AI. (10 suggestions? Ideas? Rules? Something like that …)

But if I were given 30 minutes with a group of students and had to share the things I think are most important, this is the list I’d come up with today.

This isn’t an exhaustive list. (And if I’m being honest, if you asked me tomorrow or next week, my list might be different.)

I also went back through previous AI for Admins reader polls and found some insightful comments from readers like you! I’ve included them as quotes under several of the maxims.

I’m very curious to see what you would add to this list. Please do so in a comment in the poll above!

1. AI and search engines aren’t the same.

Lots of students just assume that the internet is a question-answering machine. They’ll go somewhere. Type in the question they want answered. Look at the first result. Then they’ll just assume that it’s right, so they can move on to the next thing.

The problem? “I found it on the internet” or “I found it online” can mean lots of different things …

Search engines index pages on the web and rank them with an algorithm that tries to deliver what it thinks you’re looking for.

Even search engines can be gamed! Companies use “search engine optimization” to push their pages toward the top of search results, and they can also pay for sponsored placement, even if their content isn’t accurate.

Large language models (LLMs), like the ones behind ChatGPT, are like enormous auto-complete machines. They predict the next word (or token) in a sentence. They’re getting better, but they’re not really built for precise factual accuracy.

We need to go back to some of the maxims I was taught in newspaper newsrooms. Get your facts but then verify them. The more important they are, the more places you check them. And my favorite: “Even if your mother tells you it’s true, check it out.”

“Students are using AI as another googling tool and do not understand anything about how it works or what it can do, not to mention the ethical concerns that are associated with it.”

“Shout it from the rooftops - AI is not the same as a Google search! If you want to find the answer to a current events question, Google it. If you want to generate new content, use AI. And even that isn't necessarily always true. Some (most) Wikipedia articles are going to be more trustworthy than an answer generated by AI. And with Wikipedia, I can check the sources. I don't always get that option with an AI generated answer.” — Mickie Mueller

2. Accuracy is an issue with AI.

Large language models (LLMs) are getting better. Hallucinations (when AI confidently states something that isn’t true) are happening less and less.

But just because we see fewer absurdities like “the sky is green” or “bananas are vegetables” doesn’t mean that accuracy isn’t still an issue with AI.

Just think about textbooks. There’s that saying that history is written by the victors. Social media, and even news media corporations, can present twisted views of the truth. AI works with the data it’s given, so it absorbs those slants along with the facts.

As we move forward with AI, instead of asking “is it making mistakes?” we should be asking “what is true — and right — and fair?”

“AI does not exist in a bubble, external entities are influencing the information given to AI--unintentionally or maliciously--it doesn't matter. This means that AI can be inherently biased and unable to recognize that fact. Finding reliable information that represents multiple viewpoints takes more work now than it ever has.”

3. AI doesn’t think like a human does.

How do AI models sift through all of their training and provide responses? In general, they’re trying to do a few things …

Look for the strongest connections between lots and lots of ideas

Predict the next token/word in a sentence

Make their best statistical guess about what we want

Make generalizations based on tons and tons of data

It’s like someone who says, “I’ve read a lot of books, and based on what I’ve read, here’s the conclusion I’ve come to.” That’s helpful in some situations, but not always.

When we better understand how AI works, we better understand its limitations — and how we should navigate in light of those limitations.

4. But in some ways, AI does think like a human does.

The neural networks that AI models use were loosely inspired by the architecture and function of our own human brains.

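Think neurons and synapses. The more our brain uses a pathway between two points, the stronger it becomes, and the easier it is to use again. During training, a neural network strengthens or weakens the connections between its nodes in a loosely similar way.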

We humans are algorithmic, formulaic thinkers — in a way, similar to AI. We follow certain patterns. We make judgments about the world around us based on our experience. The more we’ve seen something, the more likely we are to apply that understanding globally to the world around us.

AI is, as Ethan Mollick puts it, an alien intelligence. But in many ways, it still mimics our own intelligence.

5. Be careful with info you give to an AI model.

Lots of us just don’t seem to care about data privacy and protecting our personally identifying information (PII).

But when we feed it to ChatGPT, for instance, we really don’t know where, how, or when it could resurface in the enormous number of messages it writes every single day. Even the AI developers don’t fully understand how AI models come up with the responses they do. It’s unpredictable, and when we feed our precious personal information to an AI model, we have no idea how it could backfire.

“We as teachers need to be educating our students on the inaccuracies and biases of AI. However, I don't know that we are warning students enough about providing personal information (PII) in their searches. It worries me that our students are providing personal information that is training an LLM.” — Simone

6. Always question the response an AI model gives you.

Imagine meeting someone at a conference … or at a bar … or sitting next to them at your kid’s sporting event. You strike up a conversation and it starts to get weird. Or slanted or twisted. And you start to wonder …

“Where did this person get their facts? I don’t think those facts are actually facts. Are they intentionally trying to deceive me? Does this person actually believe what’s coming out of their mouth?”

Your red flags start going up.

That’s an important reflex for us to have as humans. It’s natural when we talk to another human. But not everyone has developed it with AI.

This reflex can be easy to develop in students in the classroom. Put an AI response about a topic you’re studying up on the big screen and ask students to poke holes in it and ask follow-up questions. If they can’t, then model it until they’re able to do it, too.

“Students tend to believe that AI is factual. But it is filled with the bias of present day society, since it gets its facts from what has been mined. We need to help them determine how the information they get fits with reality, as they understand it.” — Vaughn

7. “How much?” is a better question than “Did you use it?”

Lots of teachers and students seem to default to a very binary, black-and-white, yes-or-no question with AI and academic integrity.

“Did you use AI?”

It’s a vague question that can be interpreted in so many ways, much like when you ask a student, “Did anyone help you do this?” or “Did you even think when you did this?”

Instead of asking the binary yes/no question, let’s get into the nuance and the details.

In what ways did AI assist you?

Did it help you on the word level — sentence level — paragraph level — big picture ideas level?

What thinking did you bring to the table — and what did AI bring?

How did AI support your learning and development?

The more that we have these conversations, the more that we normalize student thinking about the role that AI plays in their learning. And THAT is AI literacy (at least one part of it).

8. AI should augment human thinking, not replace it.

Especially in schools, I’m a firm believer that human thought comes before AI assistance. Do your best. Then level up — the thinking, the execution, the grammar, whatever — with AI.

The truth is that AI can serve as a great supplement, a side tutor, an augmentation to the instruction and work that students do. However, when we focus too much on punishing students for misusing it, AI picks up a stigma as something bad. But it can be of great benefit if students know how to use it. If we really want to develop the lifelong learners we talk about in school mission statements, learning to use AI well has to be part of it.

“I think it is important that they know that generative AI is not generating brand new creative content, but it is just repurposing what already exists based on patterns. Without the human brain developing and expressing creativity, we won't continue to grow and develop.” — Nathan De Groot

9. Use today to think about how AI should shape your future work.

It’s easy for us to get fixated on today. The state test we have to prepare students for this year. The standards we have to meet in this chapter. They’re all “today issues.”

But as students go through today, they’re also slowly preparing themselves for the work they’ll do as adults. We get the opportunity to help them think about how AI — and technology as a whole — should and shouldn’t impact their work. We can have those conversations about HOW they do the work, the process. It doesn’t have to be about grammar mistakes or misunderstandings of the content or procedural things all the time.

Let’s step back and ask students: “Here’s a way to do this … SHOULD we do it this way? Why or why not? What’s the RIGHT way to do this … and how do we know?”

“I think the misuse or over-reliance on AI can pose a serious threat to maintaining our unique human ability to think critically and creatively. If we don't want the machine learning to replace human intelligence then we can't have it do some of our thinking and then just present or share that out without our own voice. If we aren't careful it can stunt our own critical thinking.” — Chad Sussex

10. Don’t forget why we are here.

For so long, we’ve gotten used to what school is. What we do in school. The day-to-day routine.

So we just assign things to students. And we ask them to do it. And they do. (Most of them anyway.) The school world just keeps spinning — and sometimes, students don’t even know why.

In previous years, if grades were important to students, they would comply — doing the work we ask them to do even if they don’t know why. (And even if we don’t exactly know why.)

As AI becomes a bigger and bigger part of the world, it’ll become easier for students to skip the work when they don’t know why they’re doing it.

As teachers, we need to help them see why they’re doing it — why we are asking them to do it. And we’re going to have to take a very critical eye toward what we’re asking them to do — and why we’re asking them — and whether it’s really worth doing or not.

Maybe you’ve seen this beautiful poem by Joseph Fasano called “For a Student Who Used AI to Write a Paper.” It got me thinking about reminding students about why we do the work we’re doing. The line — “But what are you trying to be free of?” — keeps echoing in my mind.

For a Student Who Used AI to Write a Paper by Joseph Fasano

“However, in the classroom, I think the "solving" of the issue of AI and academic integrity is going to involve changing the way we think about classwork. Many people, including you, Matt, have talked about going back to the "why" of assignments. Why do we assign an essay? Why do we assign comprehension questions? What is the value in those things? What can we do to make sure the student is answering? Is assigning a question that the student can type into Google to find the answer any different than assigning an essay that an AI can write? I think it is going to be hard, because as humans we so often find ourselves in the more-travelled path that is easier because we have always done it that way. I think that AI was just a kick in the pants to let us know that this is the time to change education and how we measure success in a classroom.” — L. Bolin

I hope you enjoy these resources — and I hope they support you in your work!

Please always feel free to share what’s working for you — or how we can improve this community.

Matt Miller

Host, AI for Admins

Educator, Author, Speaker, Podcaster
