You’re receiving this email on Wednesday, but I’m writing it on Saturday.

Saturday night, to be exact. At about 9:00pm Eastern time. That’s what time it’ll be when I wrap this up.

This is significant because in about five hours, I have to wake up (at 2:00am!) to head to my daughter’s high school. We’re going on an 8-day school trip to Costa Rica.

(And I’m so incredibly excited! 🎉)

One of my last work tasks before I clock out, disconnect and go on this trip?

To write this email newsletter for you.

Here’s what happened …

I got on a ROLL with a very simple idea that I think is very powerful in our quest to get the AI and learning relationship right. (It’s below. It went longer than I imagined!)

And I ran out of time to do our poll — and any of the other content I put at the end of the newsletter!

So forgive me for a bit of an abbreviated newsletter this week. (Actually, because the main article is kind of long, I guess in a way it’s not really that abbreviated after all.)

If this big idea resonates with you, would you hit reply and tell me? (I might not see it until I’m back from Costa Rica, but I’d really like to see it!)

In this week’s newsletter:

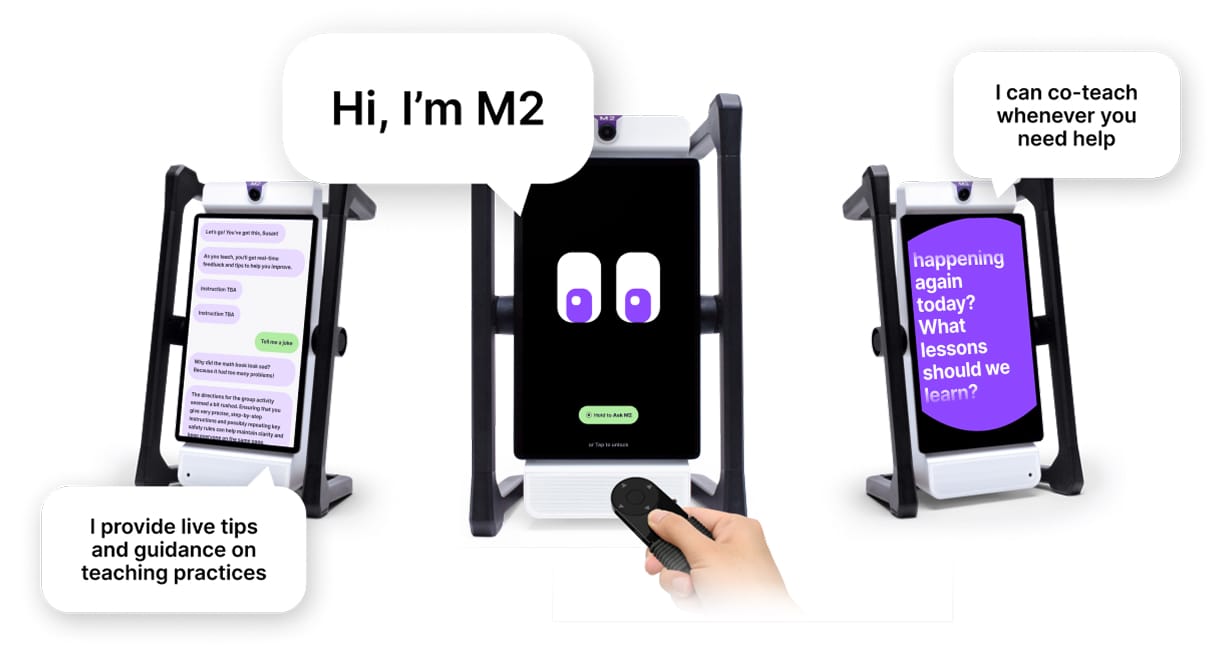

👋 Meet M2: the intelligent co-teacher

💡 A simple idea to power learning with AI

👋 Meet M2: the intelligent co-teacher

What if there was a way to leverage AI while keeping you, the teacher, front and center? What if you had an effortless way to lead engaging lessons and push students to think deeper?

Now there is. Let me introduce myself. I’m M2: the intelligent co-teacher.

I can help you enhance learning without gluing students to screens. I sit on the sideline, following along silently. Then, when needed, I can be called in with a tap and a voice command.

How do I do this?

💡 Live Teaching Tips: I provide instant AI suggestions tailored to your objective—no coach, no time-consuming video reviews. No gotchas.

🧑‍🏫 Real-Time Classroom Support: Ask me (“ask M2”) for help anytime during class. You can ask me to “Explain that,” “Translate that,” “Ask me a question,” “Tell me a joke” and more to keep modern learners engaged.

💬 Personalized Feedback: At the end of each session, I’ll give you insights on your engagement, questioning, and pacing—along with pre-built MirrorTalk reflections for students and teachers!

Get a free annual subscription with your first purchase of M2 with this referral code: M2DT2025

💡 A simple idea to power learning with AI

AI image generated by Microsoft Designer

As the conversation about AI in education grows, I often like to stop and think about how I use AI in my own work.

I spend a lot of time brainstorming and creating resources to help teachers — through my workshops, my books, and newsletters.

In a recent Ditch That Textbook newsletter about ways to use AI to teach, I talked about a maxim I’ve been using in my own AI use …

The MORE important it is, the LESS I lean on AI.

The LESS important it is, the MORE I lean on AI.

Writing my next book? That’s important. I’ll put my full human creative forces to work.

Writing a bio about myself that no one will probably read? Sure, ChatGPT can do that. (I did this recently!)

It goes back to our recent short series on student attention (part 1 / part 2). It’s finite — like money. If we only have so many “attention dollars” to spend in a day, we get to choose what we will spend them on.

I choose to spend mine on what I deem most important.

Adults ≠ students with AI

This line of thinking, however, is for adults … at least in my opinion.

Here’s why:

Adults have developed the human skills that young students are still trying to develop.

Students sometimes have flawed judgment on what’s “important.”

The activities we have students do will only develop their minds and skills if they actually do the work themselves.

Of course, “do the work themselves” is also subjective. What does “do the work themselves” actually mean? I truly believe that students can be supported in their academic work by AI in ways that still develop them as humans.

Therefore, we need some basic guiding principles to help us guide students — and design learning spaces and learning activities.

I’ve been thinking a lot lately about what those guiding principles could be.

Here’s one that guides my own professional work — and can also guide students in their academic work.

Human thinking before AI.

It sounds a little too simple maybe. But the more I think about it, the more I like it.

Not everyone seems to think this way. Guy Kawasaki, for one, doesn’t.

I attended the FETC Conference in Orlando, Florida, and Guy was one of the keynote speakers. Back in the ’80s, he was responsible for marketing the Macintosh computer line (a la Mac and Apple products today).

In his keynote, he told us that he uses AI all the time and encouraged AI use in the classroom, too. “OK,” I thought … “I see where you’re going.”

I didn’t see the next twist coming.

He proceeded to tell us that when he needs to write something, he has AI write a draft for him. Then he just adjusts and edits it.

Ohhhh …

Swing and a miss.

At least if you’re speaking to a K-12 educator audience. (And he was.)

As an adult, I support that. I’ve done it myself! It goes back to the original maxim I shared before …

The MORE important it is, the LESS I lean on AI.

The LESS important it is, the MORE I lean on AI.

If whatever Guy is writing just needs to get done — something that, in his eyes, isn’t as important — then sure, let AI compose that first draft.

But if it’s important? If he wants it to have impact?

AI often shoots for the average, the statistical mean, the most common answer — so that it has its best chance of being right.

If we want to have an impact, shooting for the statistical mean isn’t going to move the needle. It’s like they say: “If you’re writing for everyone, then you’re writing for no one.”

And the other side of this: Guy is an adult with LOTS of well-developed skills. Students are still building theirs. When they work through that “productive struggle,” those “desirable difficulties,” they improve as humans.

It happens when the human thinking happens — and it happens before using AI.

Examples of human thinking before AI

Example 1: Student does what teachers fear the most with AI. The “prompt and paste.” Copy the assignment. Feed it to ChatGPT. Copy/paste the answer. Submit it to the teacher.

AI comes before the human thinking. (Actually, the human thinking never really exists with this example assignment.)

Student doesn’t develop any thinking, knowledge, or skills.

Example 2: The “Be the Bot” activity (a favorite of mine to do with teachers during PD workshops on AI). Tell your students that you’ll ask ChatGPT to make a judgment based on something they’ve studied (what a character should have done next … what the top three most important historical innovations are). Students have to guess what AI is going to say.

AI is a part of the assignment, but not until AFTER the student (human) thinking.

AI is used as an example that students can compare/contrast to their original human thought.

It also provides an opportunity for critical thinking (did the AI get it right? what do I agree/disagree with?).

By looking critically at AI, students are also learning an AI literacy — always critique what AI gives you. An embedded AI literacy lesson (whether the teacher intended it or not).

Example 3: AI feedback on student work. Student writes about something. An AI model analyzes it and provides feedback.

Human thinking comes first here … but it all depends on how hard the student worked on the first draft. Did they throw together a sloppy, quick draft so AI could clean it up? Or did they do their best work and then refine it with AI?

Thinking can be increased with a round of student reflection before submitting the work. How did your writing go? What did you do well? What could you have improved on? What was hard, and how did you navigate it?

So, how do we do this?

How do we make this happen — this “human thinking before AI” idea?

You might be thinking, “You know, Matt … lots of students already think that AI assistants like ChatGPT are homework completion machines. They won’t even think about doing human thinking first.”

One way we WON’T be able to do this, I believe, is through coerced compliance.

We can’t bully students into doing human thinking before AI.

Why? For one, because it’s becoming nearly impossible for us to judge whether human thinking has happened first. Coerced compliance implies that you have proof that students aren’t doing what you want — and the consequences are enough to change those students’ minds.

The tables have turned. We just don’t have that concrete proof. (And, personally, I’m not convinced that coerced compliance was really all that effective in the first place.)

In short: bullying the “cheaters” into not cheating just won’t work.

So … let’s get back to the question …

How do we do this?

Here are some ideas …

Design it into learning. If your students have some sort of school-endorsed AI platform, put human thinking into the design of the assignment/activity BEFORE using AI. But then, design AI into the learning AFTER the human thinking so students see how to use it responsibly.

Model responsible AI use. Not all students follow the lead of their teachers. But lots of them do. There’s a good chance that YOU had a teacher that inspired you to be your best self. When students see a trusted adult using AI responsibly — and talking about why they don’t use it irresponsibly — it’ll help some of them know what to do. And when some students start using it responsibly, it starts to change the culture of how this technology gets used.

Make learning as relevant as possible, and help students see that relevance. So often in education, students do work “because I said so.” Because it was assigned. Because we’re taking a grade for it. They’ll do it, but why? Coerced compliance. Not because they understand why the work matters, or because it interests them. It’s because they have to. We have to at least TRY to help them see why they should do our work: why it’s relevant, why it might even be interesting. Sometimes we just skip that step. But in a world where it’s easier and easier for students to use AI to skip the human thinking, showing them the relevance is more important than ever.

This sounds a little too optimistic. Can’t students still cheat and abuse the system?

Yes. Absolutely they can.

And honestly, they still will.

Here’s the issue, though …

This was never about 100 percent compliance. It’s not about zero cheating and abuse.

(Honestly, before AI … if we’re being REALLY honest with ourselves … we never got zero cheating and abuse. We might not have seen it, but it was still happening.)

The way I see it, we have two options in the cheating and abuse situation …

We can try to punish the cheaters to bring cheating and abuse down to zero.

We can model and design for responsible use — and promote responsible use — at every opportunity, improving the culture of how this technology is used.

Will the first option work? I think we should always be on the lookout for academic dishonesty because we want the best for our students. So I don’t think we just quit talking to students about classwork that doesn’t look like it’s been done correctly.

But punishing the cheaters into compliance? I don’t think it’ll work.

AI detectors are horribly flawed.

Even if they did work, there’s zero nuance. What if the student used AI to help them with word choice and punctuation? Rewording a sentence? Recasting a paragraph? At what point is it considered “using AI” … and at what point is it irresponsible?

When it comes to the cat-and-mouse game of catching cheaters, we will always be three steps behind.

When students put more emphasis on not being caught, they put less (or no) emphasis on the actual learning. We forsake the very reason we show up to school in the first place.

So, if punishing the cheaters won’t work, what can we do?

Brace yourself. The starry-eyed optimist in me is coming …

We show them how it SHOULD be done.

We spend more time trying to cast a vision of what appropriate AI use looks like — and less time trying to catch students that cheat.

Proactive instead of reactive.

If catching cheaters feels like beating our heads against the wall, what does the alternative look like?

To me, it looks like the tide. When the tide comes into a harbor, what happens?

All ships rise.

All of the energy and effort you’d need to exert to lift all of those ships happens naturally, effortlessly, when the tide comes in.

Sure, if we start focusing more on modeling what it SHOULD look like, we will still have students who abuse the system.

But in the end, I’m convinced that we’ll be better off — and we’ll have a much more learning-centered, positive learning environment — if we emphasize responsible use.

Oh, and one more point …

We shouldn’t feel like we have to use AI for everything all the time. (Just like when we started getting laptops and Chromebooks in schools. We don’t have to use them all the time every time.)

It’s just like the modeling we talked about earlier. When the adult models that “hey, kids … we really don’t even need AI to do this, and let me tell you why” … that also starts to cast the vision. And build the culture.

Human thinking before AI.

It’ll create better thinkers. More developed human students.

It’ll start the tide rising in the harbor of your school and school district.

And in the end, we’ll show students how to get their relationship with AI right — as students and as adults.

I hope you enjoy these resources — and I hope they support you in your work!

Please always feel free to share what’s working for you — or how we can improve this community.

Matt Miller

Host, AI for Admins

Educator, Author, Speaker, Podcaster
