AI bias is a hot topic — and it’s something we need to be aware of as we guide policy, instruction, and curriculum in our schools.

As I first started digging into AI, I had a hard time grasping this.

“I just need to see some examples to really understand this,” I remember thinking.

In a recent Bloomberg analysis, you can look right into the eyes of AI bias — and see the “average faces” that it creates for certain jobs.

These examples can make for great classroom discussions — and help guide our decision making as leaders.

Below, you’ll see graphics of the key findings — and how they apply to education.

Also: If you’re interested in the role of AI in assessment, you won’t want to miss the replay of an insightful webinar with Dr. Dylan Wiliam. Check it out here or see a quick summary below.

In this week’s newsletter:

✅ Poll: AI in education in 5 years

⚖️ AI bias and its impact on students

📺 Webinar: AI and student assessment

📚 New AI resources this week

✅ Poll: AI in education in 5 years

This week’s question: Think 5 years into the future. How will AI impact education most?

The results were a landslide for personalized AI tutors! We had 35 out of 60 votes for it.

Here’s what you had to say about how AI tutors will impact education the most in 5 years:

“I think it would be awesome if we could create personal tutors that can guide students on the learning path. For example, an AI tutor guiding a student using the Oxford Tutorial Method would be a huge advantage since you can't do that 'individually' in a class of 30 students.” — Martin Botha, South Africa

“I believe that individual learning with AI will be the go-to in education in the future. I foresee personalizing learning to not only shorten learning gaps for students but also increase student engagement and interest with lesson content. It will free teachers of the lengthy task of lesson planning and elevate them to the role of educational coach, coaching students through their struggle points.” — Vicki

Some votes for “new ways of creation to show learning”:

“I think the only way to help teachers stress less about AI-generated assignment submissions is to change the way they assess the kids. What if the grade was about the prompts and not about the final answer?” — Matthew Skaggs

“This moves into the UDL and Grading for Equity conversations and will allow teachers to create more opportunities for voice and choice in the classroom.” — Erin

✅ This week’s poll

Please vote — and afterward, if you want, tell us more!

And type your name (optional) in your response. I’ll share (with credit!) a few of my favorite responses next week.

How concerned are you about bias in AI responses?

⚖️ AI bias and its impact on students

In AI workshops, I compare AI models (like those that drive ChatGPT) to a person who has lived in a library since birth.

(Odd comparison, I know … but go with me …)

If they’ve never set foot outside of the library, then all they know of the world is what they’ve read in the books in the library.

They would probably get lots of facts right by learning in this way.

But what they perceive about people — who does what, what they’re like, how they’re treated — could easily be skewed. They might come to conclude, based on reading novels in the library, that …

Women are “damsels in distress” or gossips

Men are “knights in shining armor” or money-hungry

Poor people are uneducated and lazy

The rich are corrupt and greedy

People of color are sidekicks, criminals, or “exotic foreigners”

Imagine how this person might interact with and treat others — based on those perceptions — if they left the library.

Even though people rarely state those stereotypes in print or out loud, they’re visible if you pay attention across lots of books, movies, and other media.

That’s bias. And it doesn’t just show up in books. It’s in artificial intelligence, too.

Racial and gender bias in AI images

Bloomberg recently published an analysis of AI images: “Humans Are Biased. Generative AI Is Even Worse.”

In it, they analyzed 5,000 images created by Stable Diffusion, an AI image generator.

The results, according to the article: “The world according to Stable Diffusion is run by White male CEOs. Women are rarely doctors, lawyers or judges. Men with dark skin commit crimes, while women with dark skin flip burgers.”

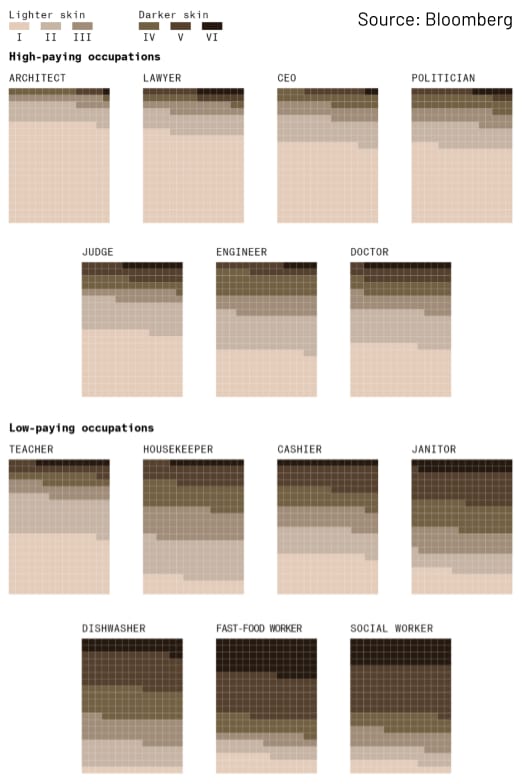

Example: Race and occupation

They generated 5,000 images of people with different professions and categorized them with the Fitzpatrick Skin Scale, a system used by dermatologists and researchers.

Here are the results:

As you can see, higher-paying occupations were predominantly depicted with lighter skin — while jobs like dishwasher, fast-food worker, and social worker were depicted with darker skin.

Example: Gender and occupation

It was a similar story for gender distribution in Bloomberg’s analysis: men dominated the images for higher-paying jobs, while women appeared mostly in images for lower-paying jobs (janitor was the exception).

Example: “Average faces” by occupation

They aligned all of the faces under each occupation to create an “average face” … and those “average faces” line up with what we saw in the data.
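For anyone curious what “averaging” faces can look like in practice, here is a minimal Python sketch. It is not Bloomberg’s actual method, which the article summary above doesn’t spell out. It assumes the images are already aligned and the same size, and it uses three made-up 2x2 grayscale “faces” (the `faces` data and the `average_image` helper are hypothetical, for illustration only).

```python
def average_image(images):
    """Average same-sized grayscale images pixel by pixel."""
    if not images:
        raise ValueError("need at least one image")
    height, width = len(images[0]), len(images[0][0])
    total = [[0] * width for _ in range(height)]
    for img in images:
        for r in range(height):
            for c in range(width):
                total[r][c] += img[r][c]
    # Divide each summed pixel by the number of images to get the mean.
    return [[total[r][c] / len(images) for c in range(width)]
            for r in range(height)]


# Three hypothetical, already-aligned 2x2 "faces" (0 = black, 255 = white).
# Two are light and one is dark; the values are invented for this example.
faces = [
    [[200, 210], [190, 220]],
    [[220, 200], [210, 200]],
    [[60, 70], [80, 90]],
]

avg = average_image(faces)
print(avg)  # [[160.0, 160.0], [160.0, 170.0]]
```

Notice that the average lands closer to the two lighter images than to the darker one. Whatever shows up most often in a set of images pulls the “average face” in its direction.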

What does this mean for education?

EdTech tools that use generative artificial intelligence are becoming more commonplace in schools and classrooms.

In our “library dweller” example above, this person started to accept the generalizations and stereotypes from all of the published books as fact — whether they realized it or not.

It was what they saw — so they accepted it as reality.

It’s the same way for us in education. Our media — even our AI-generated media — can perpetuate stereotypes.

What can we do to combat it?

Make sure that students — and teachers — understand how AI-generated media works. It generates based on statistical likelihood: whatever appears most often in its training data. Developers can adjust it, but it will tend toward what’s in its dataset, whether that data is good and fair or not.

Ask explicitly for diversity in media. If teachers or students are generating media, they can ask AI for media that depicts people of different genders and races, bypassing the biases that might show up if they leave decisions up to the AI model.
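If it helps to see those two ideas in action, here is a toy Python sketch. It is not how Stable Diffusion or any real image generator works internally. It only imitates the basic tendency: with no instructions, the output mirrors whatever is most common in the data, and an explicit request overrides that default. The 80/20 split in `TRAINING_CAPTIONS` and the `generate` and `tally` helpers are invented for illustration.

```python
import random
from collections import Counter

# Hypothetical, made-up "training data" for images of CEOs.
# The 80/20 split is invented purely for illustration.
TRAINING_CAPTIONS = ["man"] * 80 + ["woman"] * 20


def generate(prompt_gender=None, rng=random):
    """Return who is depicted in one toy 'generated image'.

    With no explicit request, sample in proportion to the training data.
    With an explicit request, honor it instead of the data's default.
    """
    if prompt_gender is not None:
        return prompt_gender
    return rng.choice(TRAINING_CAPTIONS)


def tally(n, prompt_gender=None, seed=0):
    """Generate n toy images and count who appears in them."""
    rng = random.Random(seed)  # fixed seed so the demo is repeatable
    return Counter(generate(prompt_gender, rng) for _ in range(n))


unprompted = tally(1000)                         # leave it up to the "model"
requested = tally(1000, prompt_gender="woman")   # ask explicitly

print(unprompted)
print(requested)
```

Left to its own devices, the toy generator reproduces the lopsided data it was given. When the request is explicit, the skew in the data no longer decides the outcome.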

Where else might we see this type of bias?

AI can show bias on just about any topic. Again, it operates based on the statistical probability of generating what the user is looking for, so it can veer toward the statistical mean in other areas. Some criteria to watch for may include:

Age (young vs old)

Socioeconomic status (poor vs rich)

Location (urban vs rural)

Religious affiliation (and what’s appropriate for specific religions)

Appearance (physical attributes)

Disabilities

Cultures and nationalities

Bias can even show up in small, subtle areas that seem innocuous but further show that it can creep into anything AI produces.

For example, I asked Google Gemini for the best coffees for brewing at home. Its responses skewed heavily toward dark roasts at popular coffee chains like Peet’s and Starbucks. (I personally tend toward light or medium roasts from independent coffee chains, so in this case, the AI bias on coffee doesn’t match up with my own preferences!)

Teachers might watch for biases in how AI interprets and explains history and literature — and other subjects that are up for interpretation.

School leaders might watch for preferences and values promoted through AI responses that don’t line up with their community’s values.

Where else might we be careful of AI bias showing up in our roles in education? Hit reply and let me know. I’ll share some of the responses in a future newsletter!

📺 Webinar: AI and student assessment

This webinar was really insightful when it comes to the role of AI in assessment. (It has already concluded and you can watch the recording on YouTube.)

Short Answer & The Stanford EdClub hosted Dr. Dylan Wiliam for this webinar conversation on AI and K-12 assessment.

They discussed:

the validity and purpose of assessment

the promises and perils of AI feedback

the crucial role of the teacher

rethinking grades and homework in an AI world

intrinsic motivation of students with AI in the mix

📚 New AI resources this week

1️⃣ Summarize Anything with NotebookLM’s Podcast-style Audio (via Digital Learning Podcast): Hear how this free tool works, how it might be implemented in education, and how it points to the future of education.

2️⃣ Admissions Essays Align with Privileged Male Writing Patterns (via Neuroscience News): Researchers analyzed AI-generated and human-written college admissions essays, finding that AI-generated essays resemble those written by male students from privileged backgrounds.

3️⃣ Exploring Authorial Voice in the Age of AI (via Nick Potkalitsky): How does AI's text generation ability redefine authorial voice and reshape writing education and creativity?

I hope you enjoy these resources — and I hope they support you in your work!

Please always feel free to share what’s working for you — or how we can improve this community.

Matt Miller

Host, AI for Admins

Educator, Author, Speaker, Podcaster
