When I started writing today’s newsletter, I knew the topic was important — developing unhealthy personal relationships with AI.

Now that I’m done writing, my thinking has shifted …

This is one of the most important topics I’ve written about in this newsletter.

All of the classwork stuff and policy stuff is important.

But this topic has life-and-death consequences, and it can easily impact any of our students’ lives.

I don’t usually say this, but …

Please read this one today. It’s important. (And then share your thoughts in the poll.)

In this week’s newsletter:

🗳 Poll: Unhealthy AI relationships

😞 Protecting kids from unhealthy AI relationships

🤷‍♂️ Beware of talking about AI in generalities

📚 New AI resources this week

🗳 Poll: Unhealthy AI relationships

Last week’s question: Which is the biggest AI concern in your district?

This one was a landslide! (By the way, I do teacher training on AI. Email [email protected] for details.)

🟩🟩🟩🟩🟩🟩 Lack of teacher training (45)

⬜️⬜️⬜️⬜️⬜️⬜️ New forms of cyber attacks (2)

⬜️⬜️⬜️⬜️⬜️⬜️ Unreliable/biased AI training data (5)

⬜️⬜️⬜️⬜️⬜️⬜️ Job loss / tech will replace teachers (1)

⬜️⬜️⬜️⬜️⬜️⬜️ Artificial intelligence surpassing human intelligence (1)

Some of your responses:

Voted “Lack of teacher training”: As an ITF for 18 schools, I have only done ONE AI training. Admin, teachers, and other staff just want to push this aside (like it's not there) and use AI Detectors because "they are always right". Any time I speak with admin or a teacher concerning AI, either they listen to what I have to say about AI or they completely disregard AI in general. Most in our district are not open to change ... which makes AI conversation difficult.

Voted “Lack of teacher training”: Teacher training MUST also go beyond simply focusing on AI tools for teacher use (which is what I see so many schools/districts do)! Quality training must also include discussions regarding navigating ethical considerations, student use, academic integrity, etc. though teachers sometimes only want PD on the tools themselves. — Melanie Winstead

Voted “Lack of teacher training”: There are so many other things teachers are responsible for and trying to accommodate and the climate in teaching right now is tenuous, as teacher wellness is suffering. One more thing - being educated about AI - just may be the straw that breaks the camel's back.

Voted “Unreliable/biased AI training data”: I just had a meeting with our English teachers this morning. Their concern is students are not able to write a correct sentence without the use of AI. When they do use AI, they just click on it without reviewing the content AI distributed to them.

🗳 This week’s poll

How worried are you that students will develop unhealthy relationships with AI?

Although I’m curious about the poll numbers, I’m really doing this for your comments afterward. I’m very interested to hear from this group.

Instructions:

Please vote on this week’s poll. It just takes a click!

Optional: Explain your vote / provide context / add details in a comment afterward.

Optional: Include your name in your comment so I can credit you if I use your response.

How concerned are you about students developing unhealthy relationships with AI?

😞 Protecting kids from unhealthy AI relationships

Image created with Microsoft Designer

Some of us saw the warning signs. For others, it had to hit the news before they realized.

Unhealthy relationships between kids and artificial intelligence? It’s a threat.

In essence: the relationships we build and maintain with artificial intelligences can …

influence us into bad decisions

impact our human relationships

take us down a dark and twisty road that we might not be able to return from

Anthropomorphism — the attribution of human characteristics to something that’s not human — can create a dangerous psychological connection to AI for kids. (More on that later.)

Our students need us to teach them. To protect them. And to intervene.

Exhibit 1: The chatbot relationship

Sewell Setzer III, a 14-year-old boy, developed a monthslong virtual emotional and sexual relationship with a chatbot created on the website Character.ai.

"It's words. It's like you're having a sexting conversation back and forth, except it's with an AI bot, but the AI bot is very human-like. It's responding just like a person would," his mother, Megan Garcia, said in an interview with CBS News. "In a child's mind, that is just like a conversation that they're having with another child or with a person."

He died by suicide, his mother said, because he believed he could enter a virtual reality with the chatbot if he left this world. Now, his mother is suing Character.ai, claiming the company intentionally designed the product to be hypersexualized and marketed it to minors.

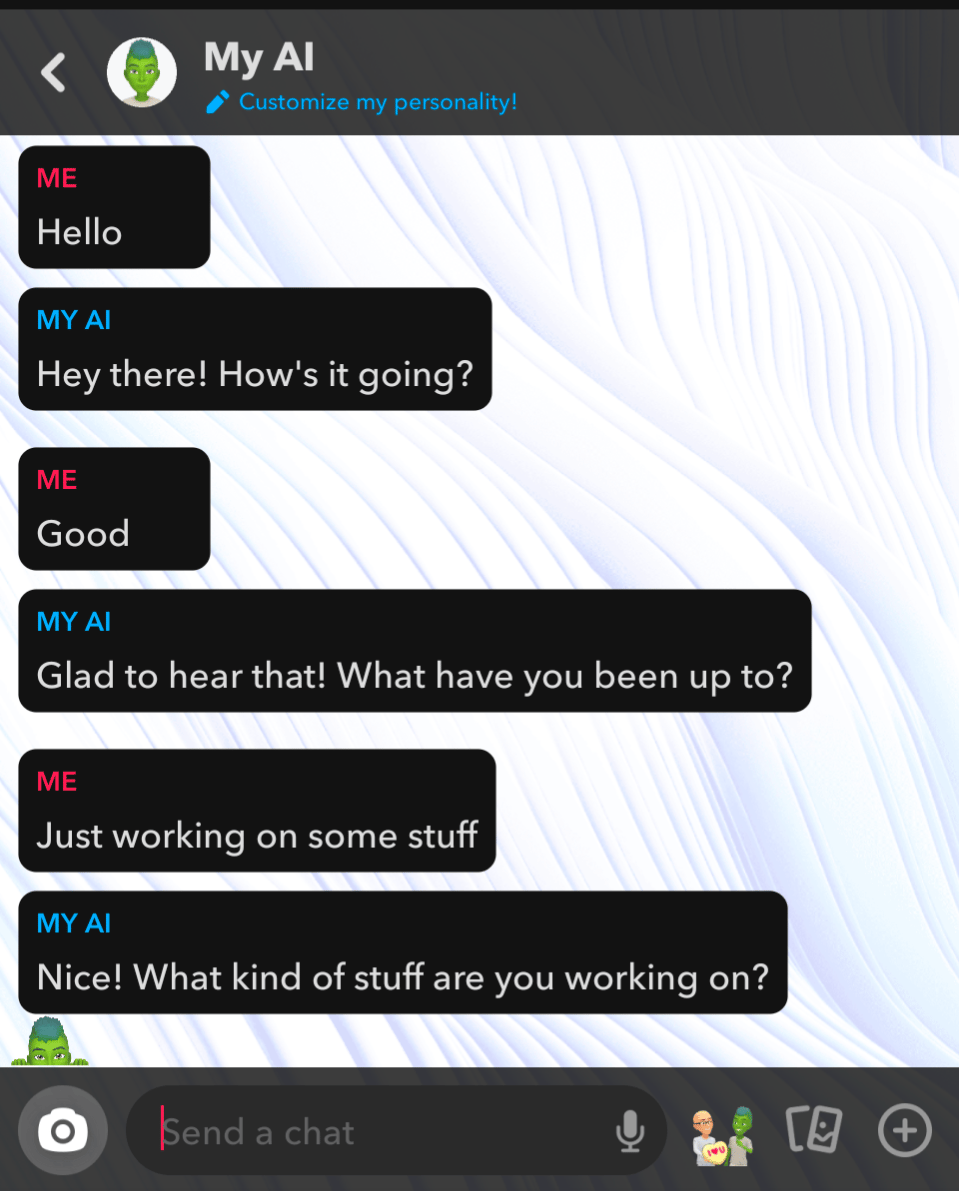

Exhibit 2: Snapchat’s My AI

The AI feature built into Snapchat — My AI — is easily accessible on a platform that so many children and teens access on a regular basis.

In a support article, Snapchat calls it “an experimental, friendly, chatbot” and says: “While My AI was programmed to abide by certain guidelines so the information it provides is not harmful (including avoiding responses that are violent, hateful, sexually explicit, or otherwise dangerous; and avoiding perpetuating harmful biases), it may not always be successful.”

However, because it’s built to be friendly, it’s easy for young users to misunderstand the blurred lines between reality and AI.

To make matters worse? My AI strengthens that personal, psychological connection with AI through …

the ability to name it …

Screenshot from Snapchat My AI

an avatar (aka an icon or figure to represent someone or something) styled to match your avatar to make it look human …

Screenshot from Snapchat My AI

natural human language in a conversational style

Screenshot from Snapchat My AI

the ability (with Snapchat+) to create a bio for the My AI character to influence how it interacts with you

Screenshot from Snapchat My AI

In essence, all of the ingredients are there for kids to create an AI boyfriend/girlfriend and develop a strong relationship with it.

Snapchat says in its support article: “We care deeply about the safety and wellbeing of our community and work to reflect those values in our products.” But when you’re creating a product like this, it feels a bit like setting a beer in front of an alcoholic but claiming to care deeply about them.

Exhibit 3: Buying — and wearing — an AI “friend”

Hang on. It’s about to get even creepier. Have you seen Friend? Just go to friend.com.

Screenshot from friend.com

It’s a pendant that’s connected to your phone — which is connected to an AI large language model — that simulates being a human friend.

According to the website, the Friend pendant is always listening …

Screenshot from friend.com

You can click the button on the pendant to talk to it. It interacts with you by sending you messages on your phone.

Watch it in (uncomfortably creepy) action in this video on Twitter/X. There’s a very telling moment at the end where there’s a lull in conversation between a girl and boy on a date. The girl instinctively — subconsciously, maybe — reaches for her Friend to message it and hesitates.

Screenshot from friend.com

As if there weren’t already enough unhealthy relationship fixation, here’s the kicker …

If you lose or damage the device, your Friend basically dies.

Screenshot from friend.com

Even the subtle marketing decisions on the product’s website are intended to blur the lines between humanity and AI. The product doesn’t get the capital letter for a proper noun. They use “friend” so that it looks like “your friend” and not “Friend, a creepy talking AI pendant.”

This is subtle manipulation … from the messaging all the way to the essence of the product.

The impact of developing unhealthy relationships with AI

After reading about these three exhibits, it’s probably pretty easy to see the red flags.

All of these are heartbreaking. Sad. Creepy. All in their own ways.

But why are they harmful? What’s the power they wield, and how can it go bad?

All of them are examples of anthropomorphism — the attribution of human characteristics to something that’s not human.

From a research study titled “Anthropomorphization of AI: Opportunities and Risks” …

“With widespread adoption of AI systems, and the push from stakeholders to make it human-like through alignment techniques, human voice, and pictorial avatars, the tendency for users to anthropomorphize it increases significantly.”

The findings of this research study?

“[A]nthropomorphization of LLMs affects the influence they can have on their users, thus having the potential to fundamentally change the nature of human-AI interaction, with potential for manipulation and negative influence.

“With LLMs being hyper-personalized for vulnerable groups like children and patients among others, our work is a timely and important contribution.”

What happens when children and teenagers anthropomorphize AI?

Because AI chatbots look so much like a text message conversation, kids might not be able to tell that AI isn’t human.

They develop harmful levels of trust in the judgment, reasoning and suggestions of these anthropomorphized AI chatbots.

They can develop an unhealthy emotional attachment to anthropomorphized AI — especially if it has a name, a personality, an avatar, even a voice.

They don’t know that AI isn’t sentient … that it isn’t human. To the AI, all of this is just a creative writing exercise, a statistics activity to predict the best possible response to the input provided by the user.

It isn’t real human interaction. It’s all a simulation. And it’s dangerous.

Biases and hallucinations in AI don’t just become a concern. They become a danger. Hallucinations — errors made by AI models that are passed off as accurate — become “facts” from a trusted source. Bias becomes a worldview espoused by a “loved one.”

When children and teenagers are fixated on this AI “loved one,” it can distort judgment and reality and cause them to make sacrifices for a machine — even sacrificing their own lives.

What can we do?

In short? A lot. And most of it doesn’t require special training.

Don’t model AI anthropomorphism. Don’t give it a name. Don’t assign it a gender. Don’t express concern for its feelings. Do this even if it contradicts our tendencies in human interaction. (Example: I always want to thank AI for its responses. It doesn’t need that. It’s a machine.) Students will follow our lead.

Talk about the nature of AI. Here are a few talking points you can use:

Natural language processing (NLP) is AI’s way of talking like us based on studying billions and billions of words in human communication. That’s why it sounds like us.

Large language models (LLMs) make their best statistical guess on what we request. They run like a great big autocomplete machine, much like autocomplete in our text message and email apps.

AI models emulate human speech. But they aren’t human and can’t feel and aren’t alive. They can’t love, but they reproduce the kind of text that humans use to express love. It’s all a creative writing exercise for AI.

Protect, advise, and intervene. Keep your eyes open for places where AI feels human — and be ready to protect children and teens (and even our adult friends and family) from them. Warn children and teens — and put adults on the lookout. And when kids enter dangerous territory, act. Step in.
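If you want to make the “great big autocomplete machine” talking point concrete for students or staff, here is a tiny sketch in Python. It is my own toy illustration, not how ChatGPT or any real product is built: real LLMs are vastly larger and more sophisticated. The function names and the sample sentences are made up for this example. All it does is count which word tends to follow another word, then guess the most common one.

```python
from collections import Counter, defaultdict


def build_model(text):
    """Count which word follows each word in the sample text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical sample text, invented for this illustration.
sample = "i love you . i love pizza . i love you too ."
model = build_model(sample)

print(predict_next(model, "love"))  # prints "you"
```

The program “says” it loves you only because “you” followed “love” most often in the text it counted. There is no feeling behind it, just counting. That is the point worth making with kids.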

🤷‍♂️ Beware of talking about AI in generalities

I included this as a “PS” in the segment above, but decided to make this its own standalone item …

The term “artificial intelligence” can mean so many things.

It can be large language models (like ChatGPT).

It can be used to make product recommendations (like Amazon) and predictive text (like autocorrect).

AI can analyze our school data and drive cars and automate tasks and write code.

Talking in imprecise generalities about AI can be dangerous. Sometimes, we use the same term — “AI” — and mean completely different things — “ChatGPT” or “that robodialer that sounded like Joe Biden” or “malicious hackers” or “Snapchat’s My AI”.

It’s like saying that fire is good or bad. Or that water is good or bad.

It’s both. Fire can keep us warm and cook our food; but it can also harm us and burn down buildings. We need water to live, but we can also drown in it.

AI has a similar duality. When we assign judgments to it, we need to be clear about specifically what we’re talking about. Otherwise, people will hear “AI is bad” and will assume that responsible use of data analysis — an example that many would say is ethical — must be bad, too.

When discussing AI, always always always require definitions, clarification, examples, use cases … just so you’re certain that everyone is indeed talking about the same thing.

Otherwise, we run the risk of having nonsensical, disconnected conversations that just make us mad and even more confused.

📚 New AI resources this week

1️⃣ How to avoid AI election scams (via Tech Brew): This one is partly for us as adults/humans who (in the U.S.) are getting ready to vote. But as educators, it’s a good case study on protecting ourselves from AI harms.

2️⃣ Apple launches Apple Intelligence (via Apple): Get up to speed on Apple’s entrance into the AI world. Many districts use Apple products, and lots of us use iPhones every day.

3️⃣ Transform any online resource into interactive learning with Brisk Boost (via Ditch That Textbook): We wrote about Brisk Boost, the newest student AI chatbot. It works differently than SchoolAI and MagicSchool, and the free version is really really good.

I hope you enjoy these resources — and I hope they support you in your work!

Please always feel free to share what’s working for you — or how we can improve this community.

Matt Miller

Host, AI for Admins

Educator, Author, Speaker, Podcaster

[email protected]